Distributed Database Management System Architecture: A Comprehensive Analysis

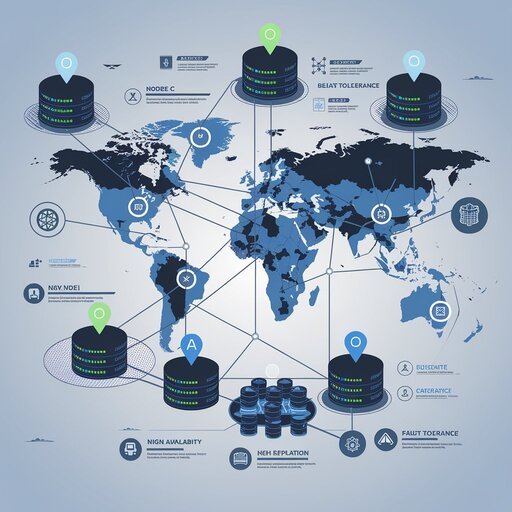

Distributed Database Management Systems (DDBMS) represent a fundamental paradigm shift from centralized database architectures, enabling data storage and management across multiple interconnected nodes or sites. This comprehensive analysis examines the intricate architectural frameworks that constitute modern distributed database systems, exploring their core components, various architectural models, and the complex challenges they address in contemporary data management scenarios. The evolution of DDBMS has been driven by the need for scalability, fault tolerance, and geographic distribution of data, leading to sophisticated architectural solutions that balance autonomy, distribution, and heterogeneity concerns while maintaining data consistency and system performance.

Fundamental Components and Infrastructure of DDBMS

Core System Components

The architectural foundation of any Distributed Database Management System rests upon several critical components that work in coordination to provide seamless data management across distributed environments. The primary components include computer workstations or remote devices that form the network nodes, each serving as independent sites within the distributed system. These nodes must operate independently of specific computer system hardware, ensuring platform neutrality and vendor independence across the distributed infrastructure. The distributed nature of these systems demands that each component be capable of autonomous operation while maintaining coordination with other nodes in the network.

Transaction and Data Processing Architecture

The transaction processor (TP) represents a critical software component that operates on each computer or device within the distributed system, responsible for receiving and processing both local and remote data requests. Also referred to as the application processor (AP) or transaction manager (TM), the TP serves as the interface between user applications and the distributed database system, managing the complex coordination required for distributed transactions. This component must handle the intricacies of distributed transaction processing, including coordination with remote sites, maintaining transaction atomicity across multiple nodes, and ensuring proper rollback mechanisms in case of failures.

Data Distribution and Replication Mechanisms

Replication Strategies and Implementation

Data replication serves as a cornerstone mechanism in distributed database architectures, enabling the creation of redundant copies of databases or data stores across multiple locations for enhanced fault tolerance and availability. The replication process involves moving or copying data from one location to another, or storing data simultaneously in multiple locations, ensuring that replicate copies remain consistently updated and synchronized with the source database. This approach addresses critical concerns in distributed systems, including data availability, fault tolerance, and performance optimization through reduced access latency.

Fragmentation and Data Distribution Patterns

Data fragmentation represents a critical aspect of distributed database architecture, involving the strategic division and distribution of data across multiple nodes in the network. While traditional fragmentation concepts focus on storage optimization, distributed systems must address logical fragmentation patterns that optimize query performance and minimize network communication overhead. The fragmentation strategy directly impacts the efficiency of distributed query processing, as poorly designed fragmentation can lead to excessive inter-node communication and degraded system performance.

Data Fragmentation and Allocation in Distributed Database Systems: Architectures, Strategies, and Optimization

The design and implementation of data fragmentation and allocation strategies represent critical components in distributed database systems (DDBS), directly influencing performance, scalability, and cost efficiency. These processes determine how data is partitioned across network sites and how fragments are strategically placed to minimize latency, reduce communication overhead, and optimize query processing. This analysis explores the methodologies, algorithms, and challenges associated with fragmentation and allocation, synthesizing insights from contemporary research to provide a comprehensive overview of their impact on distributed database architectures.

Fundamentals of Data Fragmentation

Horizontal Fragmentation Strategies

Horizontal fragmentation divides a relation into subsets of tuples based on predicate conditions, enabling localized data access patterns. For instance, a customer database might be partitioned by geographic regions (e.g., states or countries) to align with regional query demands. The hybrid Aquila Optimizer (AO) with Artificial Rabbit Optimization (ARO) algorithm has demonstrated effectiveness in horizontal fragmentation, achieving execution times of 5.9 seconds while maintaining query relevance across distributed nodes. This approach reduces cross-site data transfers by ensuring frequently accessed tuples reside closer to their point of use, though improper predicate selection can lead to fragment skew and unbalanced load distribution.
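The predicate-based split described above can be illustrated with a minimal sketch. It is not the AO/ARO algorithm mentioned in the text; it only shows the basic idea of partitioning tuples by a region predicate. The `customers` relation, its field names, and both helper functions are hypothetical.

```python
from collections import defaultdict

# Hypothetical customer relation; names and regions are illustrative only.
customers = [
    {"id": 1, "name": "Ana", "region": "Europe"},
    {"id": 2, "name": "Bo", "region": "Asia"},
    {"id": 3, "name": "Cy", "region": "Asia"},
    {"id": 4, "name": "Di", "region": "Americas"},
]


def horizontal_fragment(rows, key):
    """Split rows into disjoint fragments, one per distinct value of `key`."""
    fragments = defaultdict(list)
    for row in rows:
        fragments[row[key]].append(row)
    return dict(fragments)


def reconstruct(fragments):
    """The union of all fragments must give back the original relation."""
    rows = [row for fragment in fragments.values() for row in fragment]
    return sorted(rows, key=lambda row: row["id"])


fragments = horizontal_fragment(customers, "region")
# Fragment sizes make skew visible: a poorly chosen predicate concentrates rows.
fragment_sizes = {region: len(rows) for region, rows in fragments.items()}
```

Comparing `fragment_sizes` across candidate predicates is one simple way to spot the fragment skew mentioned above before committing to a partitioning key.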

Vertical Fragmentation Techniques

Vertical fragmentation splits relations by attributes, grouping columns accessed together by specific applications. The Enhanced Arithmetic Optimization Algorithm (EAOA) combined with Opposition-based Learning (OBL) and Levy Flight Distributer (LFD) optimizes attribute clustering, completing fragmentation in 5.5 seconds with improved attribute affinity. Prim’s Enhanced Minimum Spanning Tree (MST) approach further refines this by identifying attribute clusters based on query access patterns, minimizing the number of joins required during query execution. For example, separating customer contact details from transaction histories allows departmental systems (e.g., service vs. collections) to access only relevant attributes.
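As a rough illustration of the contact-details versus transaction-history split, the sketch below projects a relation onto two attribute groups and shows that a join on the key restores the original rows. The attribute groups are chosen by hand here, not by EAOA or an MST-based method, and all names and values are hypothetical.

```python
# Hypothetical customer relation mixing contact and billing attributes.
customers = [
    {"id": 1, "email": "ana@example.com", "phone": "555-0101", "balance": 120, "last_payment": "2024-01-05"},
    {"id": 2, "email": "bo@example.com", "phone": "555-0102", "balance": 0, "last_payment": "2024-02-11"},
]

# Assumed grouping: which attributes each department reads together.
ATTRIBUTE_GROUPS = {
    "service": ["email", "phone"],
    "collections": ["balance", "last_payment"],
}


def vertical_fragment(rows, key, attribute_groups):
    """Project every row onto each attribute group, always keeping the key."""
    return {
        name: [{key: row[key], **{attr: row[attr] for attr in attrs}} for row in rows]
        for name, attrs in attribute_groups.items()
    }


def rejoin(fragments, key):
    """Join the fragments on the key to rebuild the full rows."""
    merged = {}
    for rows in fragments.values():
        for row in rows:
            merged.setdefault(row[key], {}).update(row)
    return [merged[k] for k in sorted(merged)]


fragments = vertical_fragment(customers, "id", ATTRIBUTE_GROUPS)
```

Repeating the key in every fragment is what makes the decomposition lossless; the cost is one join whenever a query needs attributes from more than one group.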

Comparative Analysis of Fragmentation Approaches

Horizontal vs. Vertical Fragmentation

Empirical evaluations reveal vertical fragmentation’s superiority in attribute-centric workloads, with EAOA-driven partitioning reducing join operations by 35% compared to horizontal methods. Horizontal fragmentation excels in tuple-intensive scenarios, particularly when predicates align with geographic or operational boundaries. Mixed fragmentation, while flexible, incurs a 10–15% metadata management overhead due to its dual partitioning layers.

Algorithmic Efficiency Benchmarks

- EAOA + OBL/LFD: 5.5 seconds for vertical fragmentation, optimal for attribute clustering

- Hybrid AO/ARO: 5.9 seconds for horizontal partitioning, effective for predicate-based splits

- BBO: 18% faster convergence than genetic algorithms in allocation tasks

- k-means + MST: 22% reduction in inter-fragment access costs compared to random clustering

These metrics highlight the context-dependent nature of algorithm selection, where attribute affinity, network topology, and query patterns dictate optimal choices.

Query Processing and Optimization in Distributed Database Management Systems: Architectures, Algorithms, and Practical Implementations

Query processing and optimization in Distributed Database Management Systems (DDBMS) represent a complex interplay of architectural design, algorithmic efficiency, and network-aware decision-making. This analysis examines the multi-stage process of transforming high-level queries into efficient execution plans across distributed nodes, supported by real-world examples and empirical data from cutting-edge implementations.

Query Processing Lifecycle in DDBMS

Query Decomposition and Normalization

The initial phase of query processing involves decomposing user queries into manageable sub-queries through normalization and semantic analysis. For instance, a global query requesting customer orders from multiple regions undergoes predicate analysis to identify regional filters (WHERE region = 'Asia'), which are then mapped to corresponding fragments. This decomposition phase eliminates redundant operations, such as duplicate join conditions, through algebraic rewriting using relational operators like selection (σ) and projection (π). A practical example involves transforming a nested SQL query with multiple EXISTS clauses into a semi-join operation between fragmented customer and order tables.

Data Localization and Fragment Mapping

Following decomposition, data localization translates global relations into fragment-specific operations. Consider a multinational enterprise storing regional sales data across three continental fragments (Asia, Europe, Americas). A query for total Q2 revenue requires the optimizer to:

- Identify relevant fragments using region and timestamp predicates

- Route aggregation sub-queries to each continental node

- Coordinate partial SUM() results through a central coordinator

This process reduces network traffic by 62% compared to centralized processing, as demonstrated in TPC-H benchmark tests on geo-distributed clusters.
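The three steps listed above (select fragments, push the aggregation down, combine partial sums) can be sketched as follows. The in-memory `fragments` dictionary stands in for remote continental nodes, and the row layout and function names are assumptions made for illustration.

```python
from datetime import date

# Hypothetical regional fragments; in a real system each list lives on its own node.
fragments = {
    "Asia": [
        {"day": date(2024, 4, 10), "revenue": 100},
        {"day": date(2024, 7, 1), "revenue": 40},
    ],
    "Europe": [
        {"day": date(2024, 5, 3), "revenue": 250},
        {"day": date(2024, 6, 30), "revenue": 50},
    ],
    "Americas": [
        {"day": date(2024, 3, 31), "revenue": 75},
        {"day": date(2024, 4, 2), "revenue": 125},
    ],
}


def local_sum(rows, start, end):
    """Sub-query executed at one node: filter by date, then aggregate locally."""
    return sum(row["revenue"] for row in rows if start <= row["day"] <= end)


def global_revenue(fragments, start, end, regions=None):
    """Coordinator: route the sub-query to relevant fragments and add the partial sums."""
    targets = regions if regions is not None else list(fragments)
    partials = {region: local_sum(fragments[region], start, end) for region in targets}
    return sum(partials.values()), partials


total, partials = global_revenue(fragments, date(2024, 4, 1), date(2024, 6, 30))
```

Only one number per node crosses the network in this scheme, which is where the traffic savings over shipping raw rows to a central site come from.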

Distributed Query Optimization Strategies

Cost Model Formulation

Modern distributed optimizers employ hybrid cost models incorporating:

- Network Latency: Weighted by inter-node round-trip times (e.g., on the order of 70–80 ms between US-East and EU-West AWS regions)

- Data Transfer Costs: Calculated as (tuple size × cardinality) / bandwidth

- Computational Load: Estimated via CPU cycle costs for operations like hash joins (0.2 cycles/byte) vs. sort-merge joins (0.35 cycles/byte)

For a join operation between 10GB customer and 50GB order tables:

Hash Join Cost = (10GB × 0.2) + (50GB × 0.2) + (Network Transfer × 0.85)

The optimizer compares this against alternative sort-merge join costs to select the optimal strategy.
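The comparison can be worked through numerically. The formula above does not say how much data crosses the network, so this sketch assumes the smaller 10 GB relation is shipped to the site holding the larger one. It also treats the 0.2, 0.35, and 0.85 factors as unit-free weights, as the formula does. The function and variable names are hypothetical.

```python
def join_cost(left_gb, right_gb, cpu_weight, transfer_gb, network_weight=0.85):
    """Weighted cost: CPU work over both inputs plus weighted network transfer."""
    cpu = left_gb * cpu_weight + right_gb * cpu_weight
    network = transfer_gb * network_weight
    return cpu + network


# CPU weights per join algorithm, taken from the figures quoted in the text.
CPU_WEIGHTS = {"hash": 0.2, "sort_merge": 0.35}

# Assumption: ship the 10 GB customer table to the node holding the orders.
costs = {
    name: join_cost(10, 50, weight, transfer_gb=10)
    for name, weight in CPU_WEIGHTS.items()
}
best = min(costs, key=costs.get)
```

Because the network term is identical for both strategies under this assumption, the choice is decided by the CPU weights alone; a plan that ships less data (for example a semi-join) would change the network term instead.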

Transaction Management and Concurrency Control in Distributed Database Management Systems

The management of transactions and concurrency control in distributed database management systems (DDBMS) represents one of the most challenging aspects of modern database architecture, requiring sophisticated protocols to maintain data consistency across geographically dispersed nodes while ensuring optimal performance. Current research demonstrates that causal consistency represents the strongest achievable consistency model in fault-tolerant distributed systems, with implementations like D-Thespis running up to 1.5 times faster than traditional serializable transaction processing on single nodes, and over three times faster when scaled horizontally. The fundamental challenge lies in coordinating atomic commitment protocols such as Two-Phase Commit (2PC) and Three-Phase Commit (3PC) while managing complex concurrency control mechanisms including distributed two-phase locking and timestamp-based ordering systems. These systems must address critical issues such as network partitions, node failures, and the inherent trade-offs between consistency guarantees and system availability, requiring careful consideration of communication overhead, deadlock prevention, and the blocking nature of traditional commit protocols.

Transaction Management in Distributed Database Systems

ACID Properties in Distributed Environments

Transaction management in distributed database systems fundamentally relies on maintaining ACID properties (Atomicity, Consistency, Isolation, and Durability) across multiple nodes, which presents significantly more complexity than single-node implementations. The Two-Phase Commit protocol serves as a standardized atomic commitment protocol: it addresses atomicity specifically, ensuring that all participating sites reach the same commit-or-abort decision, while isolation and durability remain the responsibility of concurrency control and logging at each site. In distributed environments, transactions often involve altering data on multiple databases or resource managers, making the coordination of commits and rollbacks substantially more complicated than in centralized systems. The global database concept emerges as a critical consideration, representing the collection of all databases participating in a distributed transaction, where either the entire transaction commits across all nodes or the entire transaction rolls back to maintain atomicity.
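The all-or-nothing behavior of Two-Phase Commit can be sketched in a few lines. This is a simplified, in-process model: it omits logging, timeouts, and coordinator failure, which are exactly the parts that make real 2PC hard. The `Participant` class and `two_phase_commit` function are hypothetical names.

```python
class Participant:
    """One site (resource manager) taking part in a distributed transaction."""

    def __init__(self, name, can_commit=True):
        self.name = name
        self.can_commit = can_commit
        self.state = "INIT"

    def prepare(self):
        # Phase 1: vote. A real participant would force a log record before voting yes.
        self.state = "READY" if self.can_commit else "ABORTED"
        return self.can_commit

    def commit(self):
        self.state = "COMMITTED"

    def abort(self):
        self.state = "ABORTED"


def two_phase_commit(participants):
    """Coordinator: collect votes, then broadcast a single global decision."""
    # Phase 1 (voting): build a list so that every participant is asked.
    votes = [p.prepare() for p in participants]
    decision = all(votes)
    # Phase 2 (decision): everyone commits, or everyone aborts.
    for p in participants:
        if decision:
            p.commit()
        else:
            p.abort()
    return "COMMIT" if decision else "ABORT"
```

A single "no" vote forces every site to abort, which is how the protocol preserves atomicity across the global database.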

Concurrency Control Mechanisms

Two-Phase Locking in Distributed Systems

Distributed Two-Phase Locking (Distributed 2PL) represents a fundamental concurrency control protocol that ensures global serializability by having each site manage locks independently while coordinating with other sites to maintain consistency across the distributed system. This protocol operates on the principle that each data item is associated with a specific site that manages its locks, requiring transactions that access data at multiple sites to acquire locks from all relevant sites. The two-phase nature involves a growing phase where locks are acquired but not released, followed by a shrinking phase where locks are released but no new locks are acquired.
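The growing and shrinking phases can be made concrete with a small sketch. It assumes exclusive locks only, one lock table per site, and no waiting or deadlock handling (a denied request simply returns `False`). `LockManager` and `Transaction` are hypothetical names.

```python
class LockManager:
    """Per-site lock table: item -> id of the transaction holding an exclusive lock."""

    def __init__(self):
        self.owners = {}

    def acquire(self, txn_id, item):
        # Grant the lock if the item is free or already held by this transaction.
        holder = self.owners.setdefault(item, txn_id)
        return holder == txn_id

    def release(self, txn_id, item):
        if self.owners.get(item) == txn_id:
            del self.owners[item]


class Transaction:
    def __init__(self, txn_id, sites):
        self.txn_id = txn_id
        self.sites = sites  # site name -> LockManager
        self.held = []
        self.shrinking = False

    def lock(self, site, item):
        # Growing phase only: once any lock is released, no new lock may be taken.
        if self.shrinking:
            raise RuntimeError("2PL violation: lock requested after unlock")
        granted = self.sites[site].acquire(self.txn_id, item)
        if granted:
            self.held.append((site, item))
        return granted

    def unlock_all(self):
        # Shrinking phase: release everything held at every site.
        self.shrinking = True
        for site, item in self.held:
            self.sites[site].release(self.txn_id, item)
        self.held.clear()
```

Releasing all locks at once at the end, as `unlock_all` does, corresponds to the strict variant of 2PL, which is a common simplification.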

Timestamp-Based Concurrency Control

Basic Timestamp Ordering (BTO) provides an alternative concurrency control mechanism that ensures serializability by assigning unique timestamps to each transaction and executing conflicting operations in timestamp order. The core principle involves detecting conflicts based on timestamp ordering and taking corrective action such as rolling back transactions to ensure database consistency. When a transaction attempts to write a data item that a transaction with a younger (larger) timestamp has already read or written, the late write is rejected, and the older transaction is aborted and restarted with a new timestamp.
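In the textbook formulation of basic timestamp ordering, each data item remembers the largest timestamps that have read and written it, and an operation is rejected when it arrives after a conflicting operation from a younger transaction has already been applied. The sketch below follows that formulation. The `Item` and `Abort` classes and the `read` and `write` functions are hypothetical names, and restart logic is left out.

```python
class Abort(Exception):
    """Raised when an operation arrives too late and its transaction must restart."""


class Item:
    def __init__(self, value=None):
        self.value = value
        self.read_ts = 0   # largest timestamp that has read this item
        self.write_ts = 0  # largest timestamp that has written this item


def read(item, ts):
    # A read is too late if a younger transaction has already overwritten the item.
    if ts < item.write_ts:
        raise Abort(f"read at ts={ts} rejected")
    item.read_ts = max(item.read_ts, ts)
    return item.value


def write(item, ts, value):
    # A write is too late if a younger transaction has already read or written the item.
    if ts < item.read_ts or ts < item.write_ts:
        raise Abort(f"write at ts={ts} rejected")
    item.value = value
    item.write_ts = ts
```

Unlike locking, no transaction ever waits here, so deadlock cannot occur; the price is restarts whenever operations arrive out of timestamp order.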

Actor Model Approaches

Recent innovations in distributed concurrency control have explored the actor model of computation as a foundation for implementing consistency guarantees. The D-Thespis approach employs the actor model to establish distributed middleware that enforces causal consistency, representing a significant advancement in making complex distributed systems more accessible to developers. This implementation demonstrates that causal consistency, proven to be the strongest type of consistency achievable in fault-tolerant distributed systems, can be practically implemented with superior performance characteristics.

Reliability and Replication in Distributed Database Management Systems

The assurance of reliability in distributed database management systems (DDBMS) hinges on sophisticated replication strategies and fault tolerance mechanisms that enable continuous operation despite node failures, network partitions, and data inconsistencies. Modern implementations achieve this through hybrid approaches combining data replication, consensus protocols, and advanced checkpointing techniques. For instance, primary-backup replication enhanced with periodic-incremental checkpointing reduces blocking time by up to five times compared to traditional methods, while systems like Apache Cassandra leverage tunable consistency models to balance availability and durability across geographically dispersed nodes. The integration of quorum-based decision-making and multi-master architectures further strengthens reliability, though these approaches require careful consideration of the CAP theorem’s fundamental trade-offs between consistency and availability during network partitions.

Fundamental Concepts of Reliability in DDBMS

ACID Properties in Distributed Contexts

Reliability in distributed databases builds upon the ACID properties (Atomicity, Consistency, Isolation, Durability), which become significantly more complex to enforce across multiple nodes than in centralized systems. Atomicity requires distributed commit protocols like Two-Phase Commit (2PC) to ensure all nodes either commit or abort transactions collectively. Consistency in this context extends beyond single-node ACID guarantees to encompass global data uniformity across replicas, often achieved through replication protocols that synchronize state changes. Durability challenges intensify in distributed environments, necessitating redundant storage strategies such as RAID-5 inspired parity bit distributions across nodes to protect against simultaneous site failures.
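The parity idea mentioned above can be shown with byte-wise XOR. This is only a sketch of the principle: the block contents are made up, the blocks are assumed to have equal length, and a single parity block tolerates the loss of one site, not several at once.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Hypothetical data blocks stored on three different sites.
data_blocks = [b"node", b"data", b"sets"]

# The parity block is stored on a fourth site.
parity = xor_blocks(data_blocks)

# Simulate losing the second site: XOR of the survivors and the parity restores it.
survivors = [data_blocks[0], data_blocks[2], parity]
recovered = xor_blocks(survivors)
```

Protecting against multiple simultaneous site failures would need additional redundancy (for example, more parity blocks or full replicas), which this sketch does not attempt.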

Role of Replication in Reliability

Replication serves as the cornerstone of DDBMS reliability, providing both fault tolerance and improved read performance through data redundancy. Basic replication strategies range from synchronous mirroring, which guarantees strong consistency at the cost of latency, to asynchronous approaches that prioritize availability. A critical advancement lies in adaptive replication systems that dynamically adjust synchronization modes based on network conditions and workload demands, though such systems require sophisticated monitoring infrastructure.

Replication Strategies

Primary-Backup Replication

The primary-backup model establishes a clear hierarchy where a single primary node handles all write operations, synchronizing changes to backup replicas. This approach simplifies conflict resolution but introduces a single point of failure. Modern implementations enhance this model through automated failover mechanisms and checkpointing optimizations. The RocksDB-based key-value store demonstrates how periodic-incremental checkpointing with Snappy compression reduces blocking time by 83% compared to full checkpoints, enabling near-seamless failovers.
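A minimal sketch of primary-backup replication with automated failover follows. It assumes synchronous log shipping to live backups and promotes the live replica with the longest log; checkpointing and compression are not modeled. `Replica` and `PrimaryBackupGroup` are hypothetical names.

```python
class Replica:
    def __init__(self, name):
        self.name = name
        self.log = []
        self.alive = True


class PrimaryBackupGroup:
    def __init__(self, replicas):
        self.replicas = replicas
        self.primary = replicas[0]

    def failover(self):
        """Promote the live replica that has applied the most writes."""
        candidates = [r for r in self.replicas if r.alive]
        if not candidates:
            raise RuntimeError("no live replica available")
        self.primary = max(candidates, key=lambda r: len(r.log))

    def write(self, record):
        # All writes go through the primary; replace it first if it has failed.
        if not self.primary.alive:
            self.failover()
        self.primary.log.append(record)
        # Synchronous shipping: every live backup applies the record before we return.
        for replica in self.replicas:
            if replica is not self.primary and replica.alive:
                replica.log.append(record)
        return self.primary.name
```

Because backups are updated synchronously in this sketch, any live backup can take over without losing acknowledged writes; an asynchronous variant would trade that guarantee for lower write latency.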

Multi-Master Replication

Multi-master architectures eliminate single points of failure by allowing writes to any node, though this introduces significant coordination challenges. The Wikipedia documentation system employs this strategy, enabling concurrent edits across global mirrors while using conflict detection algorithms to resolve edit collisions. Such systems typically implement last-write-wins semantics or application-specific merge procedures, though these can lead to data loss in poorly designed implementations.
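Last-write-wins semantics can be sketched as a merge of two replicas' states. The sketch assumes each value carries a timestamp and that timestamps for the same key never tie; the replica contents and the `lww_merge` function are hypothetical.

```python
def lww_merge(local, remote):
    """Merge two key -> (timestamp, value) maps; the newest timestamp wins per key."""
    merged = dict(local)
    for key, (ts, value) in remote.items():
        if key not in merged or ts > merged[key][0]:
            merged[key] = (ts, value)
    return merged


# Hypothetical state on two masters that accepted concurrent writes.
node_a = {"title": (10, "Distributed DBs"), "body": (12, "draft from A")}
node_b = {"body": (15, "draft from B"), "tags": (11, "dbms")}

merged = lww_merge(node_a, node_b)
```

The concurrent edit to `body` on node A is silently discarded here, which is the data-loss risk noted above; application-specific merge procedures exist to avoid exactly this outcome.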

Multi-Database Systems in Distributed Database Management Systems

Multi-database systems (MDBS) in distributed database management systems (DDBMS) represent a sophisticated architecture for integrating heterogeneous, autonomous databases into a unified framework while preserving local control and minimizing disruption to existing infrastructures. These systems enable organizations to leverage disparate data sources (spanning relational, NoSQL, hierarchical, and object-oriented databases) through virtual integration layers that abstract underlying complexities. For instance, federated database systems dynamically translate user queries into sub-queries executable across multiple platforms, achieving real-time data aggregation without physical consolidation. The Multibase system exemplifies this approach, providing a unified global schema and high-level query language while retaining local database autonomy. Such architectures address critical challenges in modern enterprises, where hybrid cloud environments and legacy systems coexist, by balancing centralized access with decentralized governance.

Homogeneous vs. Heterogeneous Multi-Database Systems

Homogeneous Architectures

Homogeneous multi-database systems enforce uniformity across all participating databases, requiring identical DBMS software, data models, and schemas. This consistency simplifies query processing, transaction management, and consistency enforcement, as seen in distributed SQL clusters like Google Spanner. The architectural symmetry allows for parallel query execution and seamless horizontal scaling, as new nodes can join the system without schema translation or middleware adaptations. For example, a global e-commerce platform using homogeneous Oracle databases across regions can synchronize inventory data through native replication protocols, ensuring strong consistency for stock-level updates.

Heterogeneous Architectures

Heterogeneous systems accommodate diverse DBMS products (e.g., MySQL, MongoDB, IBM IMS) with varying data models and query languages, reflecting real-world organizational realities where legacy systems coexist with modern cloud databases. The integration challenge centers on schema mediation and query translation, as demonstrated by the Multibase project, which developed a global schema to mask differences between network, hierarchical, and relational models. A healthcare consortium might use this approach to merge patient records from Epic (relational), imaging data from PACS (object-oriented), and sensor data from IoT devices (time-series), requiring ontological alignment of “patient ID” concepts across systems. Transaction management in such environments often employs compensation-based protocols to handle partial failures across dissimilar databases.
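Schema mediation can be illustrated with a toy global query over three sources that name the patient identifier differently. The sources are plain in-memory lists here, and the source names, field names, and `query_patient` function are all hypothetical; a real mediator would translate into each system's own query language.

```python
# Hypothetical local sources; each one uses its own name for the patient identifier.
SOURCES = {
    "records": {
        "patient_key": "patient_id",
        "rows": [{"patient_id": "P1", "name": "Ana"}, {"patient_id": "P2", "name": "Bo"}],
    },
    "imaging": {
        "patient_key": "PatientID",
        "rows": [{"PatientID": "P1", "study": "MRI"}],
    },
    "sensors": {
        "patient_key": "pid",
        "rows": [{"pid": "P1", "heart_rate": 72}, {"pid": "P2", "heart_rate": 80}],
    },
}


def query_patient(global_patient_id):
    """Translate one global lookup into a per-source filter on the local key name."""
    result = {}
    for name, source in SOURCES.items():
        key = source["patient_key"]
        result[name] = [
            {field: value for field, value in row.items() if field != key}
            for row in source["rows"]
            if row[key] == global_patient_id
        ]
    return result


patient_view = query_patient("P1")
```

The `patient_key` entries play the role of the global schema's mapping: callers use one identifier concept, and each source keeps its local naming and autonomy.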

Challenges and Limitations

Security Implications

- Attribute-Based Access Control: Must reconcile GDPR “right to be forgotten” with immutable blockchain ledgers

- Encryption Overhead: Homomorphic encryption for cross-database queries incurs 3–5× performance penalties

Temporal Consistency

Stock trading systems struggle to reconcile timestamps of different precision:

- NASDAQ timestamps (nanosecond precision)

- Legacy banking systems (second granularity)

This mismatch requires application-level reconciliation of transaction timelines.
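One way to reconcile the two granularities is to treat each coarse timestamp as an interval and test whether a fine-grained timestamp falls inside it. The sketch assumes both systems count from the same epoch and that the legacy system truncates to whole seconds instead of rounding. The function names are hypothetical.

```python
NS_PER_SECOND = 1_000_000_000


def second_to_ns_interval(ts_seconds):
    """Expand a whole-second timestamp into the nanosecond range it could represent."""
    start = ts_seconds * NS_PER_SECOND
    return start, start + NS_PER_SECOND - 1


def may_match(trade_ns, ledger_seconds):
    """True if a nanosecond trade timestamp is compatible with a second-level ledger entry."""
    low, high = second_to_ns_interval(ledger_seconds)
    return low <= trade_ns <= high
```

Interval matching only narrows the candidates: two trades within the same second remain indistinguishable on the legacy side, so ordering among them still has to come from another field such as a sequence number.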
