What Is Data Mining?

Data mining has become an essential tool in today’s data-driven world, helping organizations extract valuable insights from vast amounts of information. If you’re new to the concept, this comprehensive guide will walk you through what data mining is, why it matters, and how it differs from regular data analysis.

Understanding Data Mining: Definition and Core Concepts

Data mining is the process of discovering interesting, unexpected, or valuable knowledge, such as patterns, associations, changes, anomalies, and significant structures, from large amounts of data stored in databases, data warehouses, or other information repositories. It involves examining data from different perspectives and summarizing it into relevant information that can be used to build insights[9].

Although data mining is often treated as a synonym for knowledge discovery in databases (KDD), many researchers consider it an essential step within the broader knowledge discovery process. This distinction is important because it places data mining within a larger methodological framework.

The Data Mining Process: How Does It Work?

Data mining isn’t a single-step operation but part of a larger knowledge discovery process. This process typically consists of several iterative steps:

1. Data Integration

Multiple, heterogeneous data sources may be integrated into a single repository[1]. This step ensures that all relevant data is available for mining.

2. Data Selection

Relevant data for the analysis task is retrieved from the database[1]. This focuses the mining effort on the most pertinent information.
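The first two steps can be illustrated with a toy, in-memory sketch. The two sources, the field names (`customer_id`, `region`, `amount`) and the helper names `integrate` and `select_relevant` are hypothetical; real pipelines would usually perform these steps in a database or an ETL tool.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]


def integrate(crm: List[Record], sales: List[Record], key: str = "customer_id") -> List[Record]:
    """Data integration: merge records from two hypothetical sources on a shared key."""
    by_key = {row[key]: row for row in crm}
    merged = []
    for row in sales:
        # Start from the matching CRM record (if any), then add the sales fields.
        base = by_key.get(row[key], {key: row[key]})
        merged.append({**base, **row})
    return merged


def select_relevant(records: List[Record], columns: List[str],
                    keep: Callable[[Record], bool]) -> List[Record]:
    """Data selection: keep only the rows and attributes relevant to the task."""
    return [{c: r.get(c) for c in columns} for r in records if keep(r)]


crm = [{"customer_id": 1, "region": "North"}, {"customer_id": 2, "region": "South"}]
sales = [{"customer_id": 1, "amount": 120.0},
         {"customer_id": 2, "amount": 80.0},
         {"customer_id": 1, "amount": 40.0}]

merged = integrate(crm, sales)
north = select_relevant(merged, ["customer_id", "amount"], lambda r: r.get("region") == "North")
print(north)  # [{'customer_id': 1, 'amount': 120.0}, {'customer_id': 1, 'amount': 40.0}]
```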

…………..

Understanding Decision Trees and Statistical Techniques

Decision trees stand as one of the most interpretable and widely used machine learning models for classification tasks. Their ability to mimic human decision-making processes through hierarchical splitting rules makes them indispensable in fields ranging from healthcare to finance. This comprehensive exploration delves into the mechanics of decision trees, the statistical techniques underpinning their construction, and their practical applications in modern data science.

The Anatomy of Decision Trees

A decision tree is a supervised learning algorithm that recursively partitions a dataset into subsets based on feature values, aiming to create groups that are homogeneous with respect to the target variable. The structure consists of three primary components:

- Root Node: The initial feature split that divides the dataset.

- Internal Nodes: Subsequent splits based on feature conditions.

- Leaf Nodes: Terminal nodes predicting the final class label.
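The three node types can be made concrete with a small data structure. In this sketch, the class and function names, the binary `<=` split rule and the loan-screening tree are all hypothetical: root and internal nodes store a split, leaves store a class label, and prediction walks from the root down to a leaf.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class Node:
    """One node of a binary decision tree.

    Root and internal nodes hold a split rule (feature index + threshold);
    leaf nodes hold only a class label.
    """
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None    # followed when x[feature] <= threshold
    right: Optional["Node"] = None   # followed when x[feature] > threshold
    label: Optional[str] = None      # set only on leaf nodes

    def is_leaf(self) -> bool:
        return self.label is not None


def predict(node: Node, x: Sequence[float]) -> str:
    """Walk from the root through internal nodes until a leaf is reached."""
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.label


# Hypothetical tree: feature 0 = income (thousands), feature 1 = debt ratio.
tree = Node(
    feature=0, threshold=30.0,                      # root node
    left=Node(label="reject"),                      # leaf node
    right=Node(feature=1, threshold=0.4,            # internal node
               left=Node(label="approve"),
               right=Node(label="reject")),
)

print(predict(tree, [50.0, 0.2]))  # approve
```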

Core Statistical Techniques in Tree Construction

1. Splitting Criteria: Gini Impurity vs. Information Gain

The choice of splitting criterion fundamentally shapes a tree’s structure:

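Gini Impurity: Measures the probability of misclassifying a randomly chosen element if it were labeled at random according to the class distribution in the node. The CART (Classification and Regression Trees) algorithm employs this metric, seeking splits that minimize impurity. For a binary class problem, the Gini impurity $G$ of a node is calculated as:

$$G = 1 - (p_0^2 + p_1^2)$$

where $p_0$ and $p_1$ are the proportions of the two classes in the node.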
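To show how a splitting criterion drives tree construction, here is a minimal sketch of a CART-style search for the best threshold on a single numeric feature. It uses the general form $G = 1 - \sum_k p_k^2$ (which reduces to the binary formula for two classes), scores each candidate split by the size-weighted impurity of its two children, and keeps the lowest. The function names and the exhaustive try-every-observed-value search are simplifying assumptions, not a description of any particular library.

```python
from collections import Counter
from typing import Hashable, List, Optional, Sequence, Tuple


def gini(labels: Sequence[Hashable]) -> float:
    """Gini impurity 1 - sum(p_k^2) over the class proportions in a node."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def weighted_gini(left: List[Hashable], right: List[Hashable]) -> float:
    """Impurity of a split: child impurities weighted by child size."""
    n = len(left) + len(right)
    return len(left) / n * gini(left) + len(right) / n * gini(right)


def best_threshold(xs: Sequence[float], ys: Sequence[Hashable]) -> Optional[Tuple[float, float]]:
    """Try each observed value t as the rule 'x <= t'; return (t, impurity) of the best split."""
    best = None
    for t in sorted(set(xs)):
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        if not left or not right:
            continue  # a split must produce two non-empty children
        score = weighted_gini(left, right)
        if best is None or score < best[1]:
            best = (t, score)
    return best


print(gini(["no", "no", "yes", "yes"]))                           # 0.5
print(best_threshold([1, 2, 3, 4], ["no", "no", "yes", "yes"]))  # (2, 0.0)
```

A perfectly mixed two-class node scores 0.5, and the split at `x <= 2` separates the classes completely, giving a weighted impurity of 0.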

Neural Networks

Artificial Neural Networks (ANNs): The Foundation

Artificial Neural Networks (ANNs) are computational models inspired by biological neural networks, designed to process information through interconnected nodes called artificial neurons. These neurons are organized into layers—input, hidden, and output—and communicate via weighted connections. The strength of these connections adjusts during training, enabling ANNs to learn patterns from data.
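To make the idea of weighted connections that adjust during training concrete, here is a minimal sketch of a single artificial neuron. The sigmoid activation, the squared-error loss, the learning rate of 0.5, the OR-gate training data and the function names are illustrative assumptions; the paragraph above does not prescribe a particular training rule.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs: Sequence[float], weights: Sequence[float], bias: float) -> float:
    """Weighted sum of the inputs plus a bias, passed through a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train_step(inputs: Sequence[float], target: float, weights: List[float],
               bias: float, lr: float = 0.5) -> Tuple[List[float], float]:
    """One gradient-descent step on the squared error 0.5 * (output - target)^2."""
    y = neuron(inputs, weights, bias)
    # Chain rule: dLoss/dz = (y - target) * sigmoid'(z), and sigmoid'(z) = y * (1 - y).
    delta = (y - target) * y * (1.0 - y)
    new_weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
    return new_weights, bias - lr * delta


# Teach the neuron the logical OR of two binary inputs.
data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]
weights, bias = [0.0, 0.0], 0.0
for _ in range(2000):
    for x, t in data:
        weights, bias = train_step(x, t, weights, bias)

print([round(neuron(x, weights, bias)) for x, _ in data])
```

Each update nudges every weight in proportion to its input, so connections that contributed more to the error change more.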

Feedforward Neural Networks (FNNs): Simplicity and Efficiency

Feedforward Neural Networks (FNNs) are the simplest type of ANN, characterized by unidirectional data flow from the input layer to the output layer without feedback loops. They serve as a foundation for more complex architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs).
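The forward pass of a feedforward network can be sketched in a few lines of plain Python. The layer sizes, the weights, the ReLU activation on the hidden layer and the function names are hypothetical choices for illustration; the point is that data moves strictly from the input layer toward the output layer.

```python
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weight matrix, bias vector)


def dense(x: Sequence[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Each row of `weights` holds one neuron's incoming connection weights."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(v: Sequence[float]) -> List[float]:
    return [max(0.0, z) for z in v]


def forward(x: Sequence[float], layers: List[Layer]) -> List[float]:
    """Pass x through the layers strictly in order; nothing flows back to earlier layers."""
    out = list(x)
    for i, (weights, bias) in enumerate(layers):
        out = dense(out, weights, bias)
        if i < len(layers) - 1:   # ReLU on hidden layers; output layer left linear
            out = relu(out)
    return out


# Hypothetical network: 2 inputs, one hidden layer of 2 neurons, 1 output neuron.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    ([[1.0, 2.0]], [0.1]),
]
print(forward([2.0, 1.0], layers))  # [4.1]
```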

A Beginner’s Guide to Clustering: How the K-Means Algorithm Works

Clustering is a fundamental technique in unsupervised machine learning that groups similar data points together, helping uncover hidden patterns in unlabeled datasets. Among various clustering methods, the K-Means algorithm stands out for its simplicity and effectiveness. This guide breaks down the mechanics of K-Means, its practical implementation, and key considerations for beginners.

What Is K-Means Clustering?

K-Means is an iterative partitioning algorithm that divides a dataset into K distinct, non-overlapping clusters. Each cluster is represented by its centroid, the arithmetic mean of all points in the cluster. The algorithm seeks to minimize the Within-Cluster Sum of Squares (WCSS), calculated as:

………

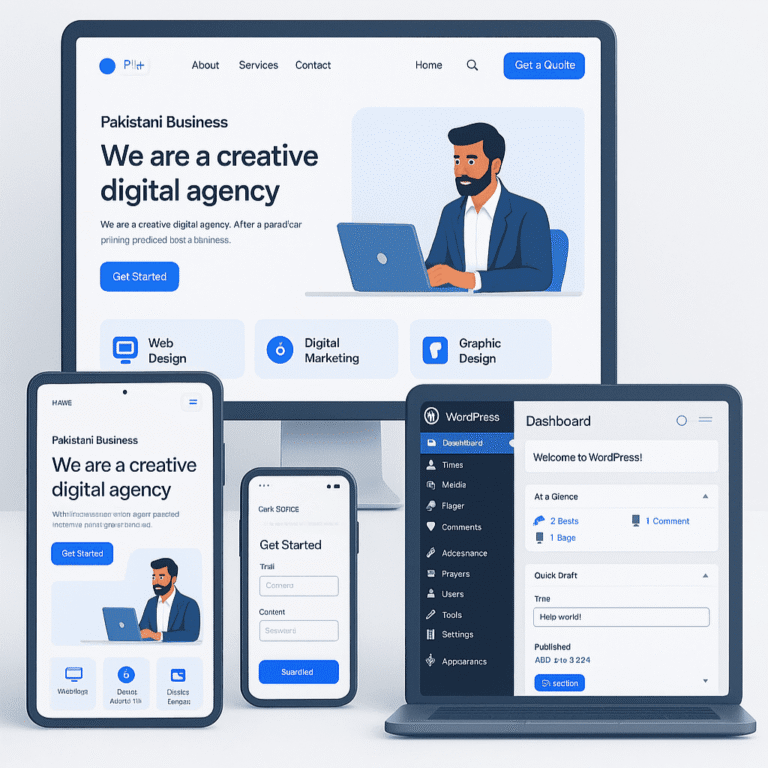
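A compact way to see how K-Means works is Lloyd's algorithm, the standard iterative procedure: assign each point to its nearest centroid, recompute each centroid as the mean of its points, and repeat until the centroids stop moving. The sketch below assumes Euclidean distance, random initialization from the data points (seeded for repeatability) and made-up 2-D points; the helper names are hypothetical. It computes WCSS as the sum of squared distances from each point to its assigned centroid.

```python
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, ...]


def sq_dist(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points: List[Point]) -> Point:
    return tuple(sum(coords) / len(points) for coords in zip(*points))


def nearest(p: Point, centroids: List[Point]) -> int:
    return min(range(len(centroids)), key=lambda j: sq_dist(p, centroids[j]))


def wcss(points: List[Point], labels: List[int], centroids: List[Point]) -> float:
    """Within-cluster sum of squares: squared distance of each point to its centroid."""
    return sum(sq_dist(p, centroids[c]) for p, c in zip(points, labels))


def kmeans(points: List[Point], k: int, max_iter: int = 100, seed: int = 0):
    rng = random.Random(seed)
    centroids = rng.sample(points, k)        # initialise with k distinct data points
    for _ in range(max_iter):
        labels = [nearest(p, centroids) for p in points]           # assignment step
        new_centroids = []
        for j in range(k):                                          # update step
            members = [p for p, c in zip(points, labels) if c == j]
            new_centroids.append(mean(members) if members else centroids[j])
        if new_centroids == centroids:       # centroids stopped moving: converged
            break
        centroids = new_centroids
    labels = [nearest(p, centroids) for p in points]
    return labels, centroids


points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(points, k=2)
print(labels, round(wcss(points, labels, centroids), 3))
```

Because the result depends on the starting centroids, K-Means can settle in a local minimum; in practice it is common to run it several times with different seeds and keep the solution with the lowest WCSS.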

Web Mining: Techniques, Applications, and Ethical Considerations

Web mining represents the convergence of data mining methodologies and web-based data analysis, enabling organizations to extract actionable insights from vast digital ecosystems. By systematically analyzing content, structure, and usage patterns across websites and applications, this discipline has become fundamental to modern digital strategies.

Foundations of Web Mining

Definition and Scope

Web mining applies data mining techniques to web-based data sources, including text, multimedia, hyperlink networks, and user interaction logs[1][2]. Unlike traditional data mining confined to structured databases, web mining handles heterogeneous data formats and dynamic content updates characteristic of online environments[3][4]. The discipline divides into three core domains: web content mining, web structure mining, and web usage mining.

Text Mining vs Web Mining: What’s the Difference and Why It Matters

In the era of big data, extracting meaningful information from the vast digital universe is crucial for businesses, researchers, and decision-makers. Two prominent data mining fields that often get confused are Text Mining and Web Mining. While both deal with extracting knowledge from textual data, they differ significantly in scope, techniques, and applications. Understanding these differences is essential for choosing the right approach to your data challenges.

What Is Text Mining?

Text mining, also known as text data mining or text analytics, focuses on extracting useful information from unstructured text documents. These documents can be emails, reports, social media posts, PDFs, or any text-based data. The goal is to convert unstructured text into structured data that can be analyzed for patterns, trends, or insights.
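A common first step in converting unstructured text into structured data is a bag-of-words document-term matrix: one row per document, one column per term, each cell a count. This is a minimal sketch under simple assumptions (lowercasing and a regex tokenizer; no stop-word removal, stemming or TF-IDF weighting), and the function names and sample documents are hypothetical.

```python
import re
from collections import Counter
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    """Lowercase the text and keep runs of ASCII letters, digits and apostrophes as tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def document_term_matrix(docs: List[str]) -> Tuple[List[str], List[List[int]]]:
    counts = [Counter(tokenize(d)) for d in docs]
    vocab = sorted(set().union(*counts))          # every distinct term, alphabetically
    matrix = [[c[term] for term in vocab] for c in counts]
    return vocab, matrix


docs = ["Great product, great price.", "Poor price"]
vocab, matrix = document_term_matrix(docs)
print(vocab)   # ['great', 'poor', 'price', 'product']
print(matrix)  # [[2, 0, 1, 1], [0, 1, 1, 0]]
```

Once text is in this tabular form, the clustering, classification and association techniques used on structured data can be applied to it.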

Solving Optimization Problems with Genetic Algorithms in Data Mining

Optimization plays a pivotal role in data mining, where the goal is to extract meaningful patterns, build accurate predictive models, and efficiently manage large datasets. Genetic Algorithms (GAs), inspired by the principles of natural selection and genetics, have emerged as a powerful evolutionary optimization technique widely applied in data mining tasks. This blog explores how GAs work, their advantages in solving optimization problems in data mining, and real-world applications demonstrating their effectiveness.

What Are Genetic Algorithms?

Genetic Algorithms are search heuristics that mimic the process of natural evolution. They iteratively evolve a population of candidate solutions to optimize a given objective function. The core operators of a GA include:

- Selection: Choosing the fittest individuals based on a fitness function.

- Crossover (Recombination): Combining parts of two parent solutions to create offspring.

- Mutation: Introducing random changes to offspring to maintain genetic diversity.

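By repeatedly applying these operators, GAs explore the solution space globally, balancing exploration (searching new regions) and exploitation (refining good solutions). This reduces, though does not eliminate, the risk of getting trapped in local optima and often yields near-optimal solutions.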
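The three operators can be combined into a minimal GA sketch. The bit-string encoding, tournament selection, single-point crossover, bit-flip mutation, elitism and all parameter values are illustrative assumptions; the toy fitness function scores a hypothetical feature subset using made-up relevance scores and a made-up per-feature cost.

```python
import random
from typing import Callable, List, Tuple

Genome = List[int]


def tournament(pop: List[Genome], fits: List[float], rng: random.Random, k: int = 3) -> Genome:
    """Selection: the fittest of k randomly drawn individuals becomes a parent."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: fits[i])]


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    """Single-point crossover: head of one parent, tail of the other."""
    point = rng.randint(1, len(a) - 1)
    return a[:point] + b[point:]


def mutate(g: Genome, rate: float, rng: random.Random) -> Genome:
    """Flip each bit independently with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in g]


def genetic_algorithm(fitness: Callable[[Genome], float], n_bits: int, pop_size: int = 30,
                      generations: int = 60, mutation_rate: float = 0.05,
                      seed: int = 0) -> Tuple[Genome, float]:
    rng = random.Random(seed)
    pop = [[rng.randint(0, 1) for _ in range(n_bits)] for _ in range(pop_size)]
    for _ in range(generations):
        fits = [fitness(g) for g in pop]
        elite = pop[max(range(pop_size), key=lambda i: fits[i])]
        children = [elite[:]]                 # elitism: keep the best genome unchanged
        while len(children) < pop_size:
            child = crossover(tournament(pop, fits, rng), tournament(pop, fits, rng), rng)
            children.append(mutate(child, mutation_rate, rng))
        pop = children
    best = max(pop, key=fitness)
    return best, fitness(best)


# Hypothetical feature-selection task: bit i = 1 keeps feature i.
relevance = [0.9, 0.1, 0.8, 0.05, 0.7, 0.2, 0.6, 0.0]   # made-up usefulness scores
COST = 0.3                                             # made-up cost per kept feature


def subset_fitness(g: Genome) -> float:
    return sum(r - COST for r, bit in zip(relevance, g) if bit)


best, score = genetic_algorithm(subset_fitness, n_bits=len(relevance))
print(best, round(score, 2))
```

Because the search is stochastic, different seeds can return different subsets; elitism only guarantees that the best fitness never decreases from one generation to the next.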

Fuzzy Logic in Data Mining: Making Smart Decisions with Uncertain Data

In today’s data-driven world, uncertainty and imprecision are inherent in many datasets. Traditional data mining techniques often struggle when faced with vague, ambiguous, or incomplete information. This is where fuzzy logic shines, providing a mathematical framework that mimics human reasoning by handling uncertainty and partial truths effectively. This blog explores the role of fuzzy logic in data mining, its advantages, and practical applications that empower smarter decision-making with uncertain data.

What Is Fuzzy Logic?

Introduced by Lotfi Zadeh in 1965, fuzzy logic extends classical binary logic by allowing truth values to range between 0 and 1, rather than being strictly true (1) or false (0). This enables the representation of degrees of membership in sets, reflecting real-world situations where boundaries are not clear-cut.

For example, instead of classifying a temperature as simply “hot” or “not hot,” fuzzy logic allows it to be “somewhat hot” with a membership degree of 0.7, capturing nuances that traditional logic misses.
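A membership function makes this concrete. The sketch below assumes a hypothetical linear ramp in which temperatures at or below 20 °C are not hot at all (degree 0), temperatures at or above 35 °C are fully hot (degree 1), and values in between scale linearly. With these made-up breakpoints, 30.5 °C is hot to degree 0.7.

```python
def hot_membership(temp_c: float, not_hot_below: float = 20.0,
                   fully_hot_above: float = 35.0) -> float:
    """Degree (0..1) to which a temperature counts as 'hot' on a hypothetical linear ramp."""
    if temp_c <= not_hot_below:
        return 0.0
    if temp_c >= fully_hot_above:
        return 1.0
    return (temp_c - not_hot_below) / (fully_hot_above - not_hot_below)


for t in (18.0, 30.5, 40.0):
    print(t, hot_membership(t))
```

Triangular and trapezoidal shapes are other common choices; the ramp is used here only because it is the simplest to read.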

Why Fuzzy Logic Matters in Data Mining

Data mining often deals with complex, noisy, and heterogeneous data where crisp classifications or rigid rules fall short. Fuzzy logic complements data mining by:

Fuzzy Set Operations

| Operation | Symbol | Definition | Example (μA = 0.6, μB = 0.8) | Result |
|---|---|---|---|---|
| Union | A ∪ B | max(μA(x), μB(x)) | max(0.6, 0.8) | 0.8 |
| Intersection | A ∩ B | min(μA(x), μB(x)) | min(0.6, 0.8) | 0.6 |
| Complement | ¬A | 1 − μA(x) | 1 − 0.6 | 0.4 |
| Bold Union | A ⊕ B | min(1, μA(x) + μB(x)) | min(1, 0.6 + 0.8) | 1.0 |
| Bold Intersection | A ⊗ B | max(0, μA(x) + μB(x) − 1) | max(0, 0.6 + 0.8 − 1) | 0.4 |
| Equality | A ≡ B | 1 − \|μA(x) − μB(x)\| | 1 − \|0.6 − 0.8\| | 0.8 |
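Each row of the table maps to a one-line function. This sketch (the function names are hypothetical) reproduces the example column for μA = 0.6 and μB = 0.8.

```python
def f_union(a: float, b: float) -> float:             # A ∪ B
    return max(a, b)


def f_intersection(a: float, b: float) -> float:      # A ∩ B
    return min(a, b)


def f_complement(a: float) -> float:                   # ¬A
    return 1.0 - a


def bold_union(a: float, b: float) -> float:           # A ⊕ B
    return min(1.0, a + b)


def bold_intersection(a: float, b: float) -> float:    # A ⊗ B
    return max(0.0, a + b - 1.0)


def equality(a: float, b: float) -> float:             # A ≡ B
    return 1.0 - abs(a - b)


mu_a, mu_b = 0.6, 0.8
results = {
    "union": f_union(mu_a, mu_b),
    "intersection": f_intersection(mu_a, mu_b),
    "complement": f_complement(mu_a),
    "bold union": bold_union(mu_a, mu_b),
    "bold intersection": bold_intersection(mu_a, mu_b),
    "equality": equality(mu_a, mu_b),
}
for name, value in results.items():
    # round() hides binary floating-point noise (0.6 + 0.8 - 1.0 is not exactly 0.4)
    print(f"{name}: {round(value, 2)}")
```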


Ghulam Ahmad is an Excellent Writer, His magical words added value in growth of our life. Highly Recommended

- Irfan Ahmad
